Page Content

Speech recognition technology (e.g. Dragon, Siri) can be used as both an accommodation for certain disabilities and as a method to facilitate the caption/transcription process. However it is important that the conditions for use be optimized or the technology will not meet your intended needs.

Common Uses

As an Accommodation

Speech recognition software is a valuable tool for some users who have difficulties operating a keyboard. It is also used by people who commonly dictate notes to generate documents or operate software.

To Capture Transcripts/Captions

As will be described later in this page, it is possible to use speech recognition to capture a caption or transcript, but there are challenges that must be overcome to ensure an accurate transcription.

About Dragon

The most prominent vendor for speech recognition products on a desktop are the Dragon line from Nuance. Some Speech recognition is also built into to different operating systems such as Windows 7, recent versions of OS X and of course the iPhone Siri, but Dragon is often considered to be one of the most accurate speech recognition engines.

Dragon can used to both capture spoken text in a document or to use spoken commands in different software. Dragon is sold with a specialized microphone and requires a user to "train" the system on his or her voice to achieve the highest levels of accuracy.

To facilitate recognition across dialects, Dragon ships with five (5) English variants

- U.S. (and Canada) – the only version which defaults to U.S. English spelling.

- U.K.

- India

- Australia

- S.E. Asia

Dragon Tutorials

- Lynda.com Essential Dragon (Penn State Only)

- Nuance Instructional Videos

- Dragon for Dummies

- Dragon Cheat Sheets

The Challenge of Speech Recognition

When Speech Recognition Fails

Although systems such as YouTube support speech recognition, results cannot be guaranteed. Even videos with high quality audio can result in unintentionally humorous errors such as in this YouTube generated automatic caption about the Flipped Classroom. Even a few errors can make a caption difficult to parse.

Note: This video also includes an actual caption track.

Automatic captions may also not display as a coherent phrase.

Speech Signal

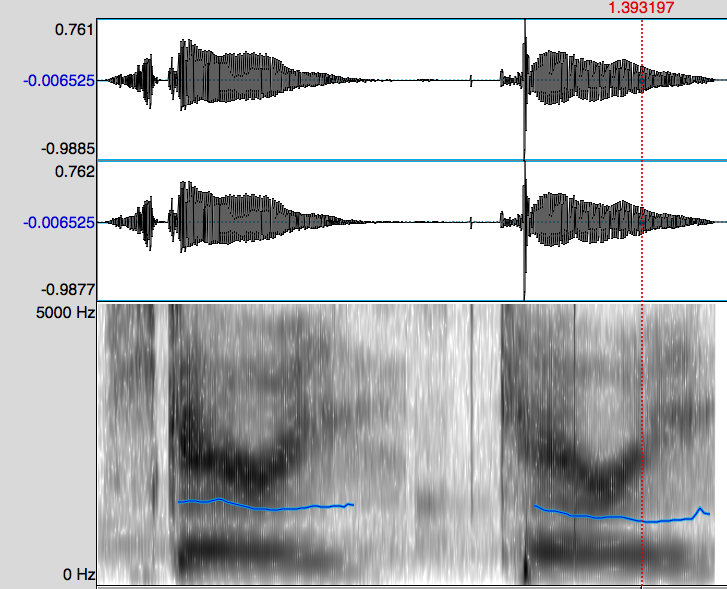

To understand why speech recognition isn’t perfect yet, it’s important to consider the task it’s trying to perform. When people hear speech, their minds are able to convert the sound into groups of letters and words.

The reality is that speech is a continuous sound waves with very subtle acoustic transitions for different sounds (see images below, the bottom ones are the spectograms that phoneticians use). Your ears and brain are doing a lot of processing to help you understand that that person just said, and some of it is based on being able to match sounds to a mental dictionary.

To hear what language acoustic signal really sounds like, try listening to a language you don’t know well. It’s often difficult to distinguish words in this case. Part of knowing a language is being able to process the speech signal.

Crossing Dialects

Speakers in different dialects have variant sound systems. As this video about the Northern Cities and other vowel shifts in the U.S. the same sound could be interested as black in Standard U.S. English or block in some parts of the Great Lakes area.

Two Optimal Scenarios

Speech recognition is the most effective in these situations

- Limited vocabulary – This includes phone menus and kiosks.

- Specific speaker – Speech recognition is effective when trainied on a single voice, preferably tied to a system where a dictionary can be modified over time.

Note that any video with multiple speakers will likely fall outside these guidelines.

Captioning Tips

To optimize speech recognition for captioning, consider these tips

- Review generated transcripts for accuracy. Even minor errors can impede comprehension (they are not like typos where the original word can often be deduced).

- The better the audio quality, the higher the quality. And unfortunately, the closer to a standard dialect a speaker is, the more accurate the results.

- You can leverage Dragon’s training in two ways.

- A single speaker can repeat what is being said into Dragon after the fact.

- A speaker profile (e.g. an instructor) can be provided by Dragon.